This post has results for the Insert Benchmark on a small server with a cached workload. The goal is to compare MariaDB and MySQL.

This work was done by Small Datum LLC and sponsored by the MariaDB Foundation.

The workload here has some concurrency (4 clients) and the database is cached. The results might be different when the workload is IO-bound or has more concurrency. Results were recently shared for a workload with low concurrency (1 client).

The results here are similar to the results on the low-concurrency benchmark.

tl;dr

- Modern MariaDB (11.4.1) is faster than modern MySQL (8.0.36) on all benchmark steps except qr* (range queries) and l.x (create index), where they have similar performance.

- Modern MariaDB (11.4.1) was at most 12% slower than older MariaDB (10.2.44). MariaDB has done a great job of avoiding performance regressions over time.

- There are significant performance regressions from older MySQL (5.6) to modern MySQL (8.0).

- Configuring MariaDB 10.4 to make it closer to 10.5 (only one InnoDB buffer pool, only one redo log file) reduces throughput by ~5% on several benchmark steps.

This report has results for InnoDB from:

- MySQL - versions 5.6.51, 5.7.44 and 8.0.36

- MariaDB - versions 10.2.44, 10.3.39, 10.4.33, 10.5.24, 10.6.17, 10.11.7, 11.4.1. Versions 10.2, 10.3, 10.4, 10.5, 10.6 and 10.11 are the most recent LTS releases and 11.4 will be the next LTS release.

I started with the my.cnf.cz11a_bee config and then made small changes. For all configs I set these values to limit the size of the history list, which also keeps the database from growing larger than expected. I rarely did this in the past.

innodb_max_purge_lag=500000

innodb_max_purge_lag_delay=1000000

Some of the changes across versions made it challenging to keep the configs comparable:

- the InnoDB change buffer was removed in MariaDB 11.4.

- I disable it in all my.cnf files for all MariaDB versions except for the my.cnf.cz11d and my.cnf.cz11d1 configs.

- I don't disable it for the MySQL configs named my.cnf.cz11[abc]_bee but I do disable it for the my.cnf.cz11d_bee config used by MySQL. The result is that for MariaDB the my.cnf.cz11d_bee config enables the change buffer while for MySQL it disables it. Sorry for the confusion.

- innodb_buffer_pool_instances was removed in MariaDB 10.5 (assume it is =1).

- I don't set it to =1 in the my.cnf.cz11[abc]_bee configs for MariaDB 10.2, 10.3 and 10.4.

- innodb_flush_method was removed in MariaDB 11.4 and there is a new way to configure this.

- In 11.4.1 there is an equivalent of =O_DIRECT but not of =O_DIRECT_NO_FSYNC.

For MariaDB the configs are:

- my.cnf.cz11a_bee - uses innodb_flush_method=O_DIRECT_NO_FSYNC

- Two configs are only used with MariaDB 10.4 to make it closer to 10.5+:

- my.cnf.cz11abpi1_bee - like my.cnf.cz11a_bee except it sets innodb_buffer_pool_instances and innodb_page_cleaners to =1. This config is only used for MariaDB 10.4.

- my.cnf.cz11aredo1_bee - like my.cnf.cz11a_bee except it uses one big InnoDB redo log instead of 10 smaller ones.

- my.cnf.cz11b_bee - uses innodb_flush_method=O_DIRECT

- my.cnf.cz11c_bee - uses innodb_flush_method=fsync

- my.cnf.cz11d_bee - uses innodb_flush_method=O_DIRECT_NO_FSYNC and enables the InnoDB change buffer

For MySQL the configs are:

- my.cnf.cz11a_bee - uses innodb_flush_method=O_DIRECT_NO_FSYNC

- my.cnf.cz11b_bee - uses innodb_flush_method=O_DIRECT

- my.cnf.cz11c_bee - uses innodb_flush_method=fsync

- my.cnf.cz11d_bee - uses innodb_flush_method=O_DIRECT_NO_FSYNC and disables the InnoDB change buffer. Note that the my.cnf.cz11[abc]_bee configs for MySQL enable it, which is the opposite of what is done for MariaDB.
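The MariaDB config variants above differ from my.cnf.cz11a_bee in only a few settings. As a reading aid, here is a minimal Python sketch that models them as overrides on a base config. It is an illustration under stated assumptions, not the real my.cnf files: only settings named in this post are included, the change buffer is expressed with innodb_change_buffering (none or all), the MariaDB 11.4 changes to innodb_flush_method and the change buffer are ignored, and redo_log_files is a hypothetical key standing in for "one big redo log instead of 10 smaller ones".

```python
# Sketch: MariaDB configs modeled as overrides on top of my.cnf.cz11a_bee.
# Only settings mentioned in the post are modeled. "redo_log_files" is a
# hypothetical key (not a real server variable) for the redo log layout.

BASE = {  # subset of my.cnf.cz11a_bee as used for MariaDB
    "innodb_flush_method": "O_DIRECT_NO_FSYNC",
    "innodb_change_buffering": "none",  # change buffer disabled
    "innodb_max_purge_lag": 500000,
    "innodb_max_purge_lag_delay": 1000000,
    "redo_log_files": 10,  # hypothetical key
}

OVERRIDES = {
    "cz11a_bee": {},
    "cz11abpi1_bee": {"innodb_buffer_pool_instances": 1, "innodb_page_cleaners": 1},
    "cz11aredo1_bee": {"redo_log_files": 1},  # hypothetical key
    "cz11b_bee": {"innodb_flush_method": "O_DIRECT"},
    "cz11c_bee": {"innodb_flush_method": "fsync"},
    "cz11d_bee": {"innodb_change_buffering": "all"},  # change buffer enabled
}


def build_config(name):
    """Return the merged settings for one config name (BASE is not mutated)."""
    cfg = dict(BASE)
    cfg.update(OVERRIDES[name])
    return cfg


def render_mycnf(cfg):
    """Render settings as my.cnf lines, skipping the hypothetical keys."""
    lines = ["[mysqld]"]
    for key in sorted(cfg):
        if key.startswith("innodb_"):
            lines.append(f"{key}={cfg[key]}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_mycnf(build_config("cz11b_bee")))
```

Keeping each variant as a small override makes it easy to see that, for example, cz11b_bee and cz11c_bee change only the flush method while cz11d_bee changes only the change buffer setting.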

The benchmark steps are:

- l.i0

- insert 8 million rows per table in PK order (32M rows in total). Each table has a PK index but no secondary indexes. There is one connection per client.

- l.x

- create 3 secondary indexes per table. There is one connection per client.

- l.i1

- use 2 connections/client. One inserts 40M rows and the other does deletes at the same rate as the inserts. Each transaction modifies 50 rows (big transactions). This step is run for a fixed number of inserts, so the run time varies depending on the insert rate.

- l.i2

- like l.i1 but each transaction modifies 5 rows (small transactions) and 10M rows are inserted and deleted.

- Wait for X seconds after the step finishes to reduce variance during the read-write benchmark steps that follow. The value of X is a function of the table size.

- qr100

- use 3 connections/client. One does range queries and performance is reported for this. The second does 100 inserts/s and the third does 100 deletes/s. The second and third are less busy than the first. The range queries use covering secondary indexes. This step is run for 1800 seconds. If the target insert rate is not sustained, that is considered to be an SLA failure. If the target insert rate is sustained, the step does the same number of inserts for all systems tested.

- qp100

- like qr100 except uses point queries on the PK index

- qr500

- like qr100 but the insert and delete rates are increased from 100/s to 500/s

- qp500

- like qp100 but the insert and delete rates are increased from 100/s to 500/s

- qr1000

- like qr100 but the insert and delete rates are increased from 100/s to 1000/s

- qp1000

- like qp100 but the insert and delete rates are increased from 100/s to 1000/s
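The arithmetic behind these step definitions can be checked with a small Python sketch. It assumes one table per client (4 tables, which matches 8M rows per table and 32M in total) and that the insert and delete rates in the qr* and qp* steps are per client. The SLA check is a simplified reading of "the target insert rate is sustained", not the benchmark's actual code, and the helper names are hypothetical.

```python
# Sketch of the step arithmetic, assuming one table per client and
# per-client insert/delete rates for the qr* and qp* steps.

CLIENTS = 4
ROWS_PER_TABLE_L_I0 = 8_000_000
QUERY_STEP_SECONDS = 1800


def total_rows_loaded(clients=CLIENTS, rows_per_table=ROWS_PER_TABLE_L_I0):
    """Rows inserted by l.i0 across all tables."""
    return clients * rows_per_table


def step_seconds(rows_to_insert, inserts_per_second):
    """l.i1 and l.i2 run for a fixed number of inserts, so run time depends on the rate."""
    return rows_to_insert / inserts_per_second


def target_inserts(rate_per_second, seconds=QUERY_STEP_SECONDS):
    """Inserts done by one client's insert connection when the target rate is met."""
    return rate_per_second * seconds


def sla_met(actual_rate, target_rate):
    """Simplified SLA check: the measured insert rate must reach the target."""
    return actual_rate >= target_rate


if __name__ == "__main__":
    print(f"l.i0 loads {total_rows_loaded():,} rows")
    for rate in (100, 500, 1000):
        print(f"q*{rate}: {target_inserts(rate):,} inserts per client in {QUERY_STEP_SECONDS}s")
```

This also shows why the qr* and qp* steps do the same amount of write work for every DBMS that meets the SLA, while the duration of l.i1 and l.i2 varies with the insert rate.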

Performance reports are here for:

- MariaDB 10.11, MariaDB 11.4, MySQL 8.0 release+config variations

- most MariaDB release+config variations

- MariaDB 10.4 release+config variations

- MariaDB 10.11 release+config variations

- MariaDB 11.4 release+config variations

- most MySQL release+config variations

- all DBMS

The throughput metrics are:

- insert/s for l.i0, l.i1, l.i2

- indexed rows/s for l.x

- range queries/s for qr100, qr500, qr1000

- point queries/s for qp100, qp500, qp1000

- The base case is MariaDB 10.11.7 with the cz11a_bee config (ma101107_rel.cz11a_bee). It is compared with:

- MariaDB 11.4.1 with the cz11b_bee config (ma110401_rel.cz11b_bee)

- MySQL 8.0.36 with the cz11a_bee config (my8036_rel.cz11a_bee)

- Relative throughput per benchmark step

- l.i0

- relative QPS is 0.99 in MariaDB 11.4.1

- relative QPS is 0.75 in MySQL 8.0.36

- l.x - I ignore this for now

- l.i1, l.i2

- relative QPS is 0.88, 0.98 in MariaDB 11.4.1

- relative QPS is 0.52, 0.54 in MySQL 8.0.36

- qr100, qr500, qr1000

- relative QPS is 0.99, 1.01, 1.00 in MariaDB 11.4.1

- relative QPS is 1.08, 1.05, 1.06 in MySQL 8.0.36

- qp100, qp500, qp1000

- relative QPS is 0.98, 1.00, 1.00 in MariaDB 11.4.1

- relative QPS is 0.77, 0.79, 0.77 in MySQL 8.0.36
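To read these numbers, it helps to convert relative QPS into a percent change. A minimal Python sketch, assuming relative QPS is the throughput of the tested version divided by the throughput of the base case (so 1.0 means the same as the base case and values below 1.0 mean slower). The values are copied from the list above for MySQL 8.0.36; the helper names are hypothetical.

```python
# Relative QPS, assumed to be QPS(tested) / QPS(base case).
# 1.0 = same as the base case, < 1.0 = slower, > 1.0 = faster.


def relative_qps(qps_me: float, qps_base: float) -> float:
    if qps_base <= 0:
        raise ValueError("base QPS must be positive")
    return qps_me / qps_base


def pct_change(rel: float) -> float:
    """Convert a relative QPS value into a percent change vs the base case."""
    return (rel - 1.0) * 100.0


# Relative QPS for MySQL 8.0.36 vs MariaDB 10.11.7 (copied from the list above).
MYSQL_8036 = {
    "l.i0": 0.75, "l.i1": 0.52, "l.i2": 0.54,
    "qr100": 1.08, "qr500": 1.05, "qr1000": 1.06,
    "qp100": 0.77, "qp500": 0.79, "qp1000": 0.77,
}

if __name__ == "__main__":
    for step, rel in MYSQL_8036.items():
        print(f"{step:7s} {rel:.2f} ({pct_change(rel):+.0f}%)")
```

For example, a relative QPS of 0.52 on l.i1 means MySQL 8.0.36 gets about 48% less throughput than the base case on that step.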

- The base case is MariaDB 10.2.44 with the cz11a_bee config (ma100244_rel.cz11a_bee). It is compared with more recent LTS releases from 10.3, 10.4, 10.5, 10.6, 10.11 and 11.4.

- Throughput per benchmark step for 11.4.1 relative to 10.2.44

- l.i0

- relative QPS is 0.88 in MariaDB 11.4.1

- l.x - I ignore this for now

- l.i1, l.i2

- relative QPS is 0.99, 1.09 in MariaDB 11.4.1

- qr100, qr500, qr1000

- relative QPS is 0.88, 0.93, 0.92 in MariaDB 11.4.1

- qp100, qp500, qp1000

- relative QPS is 0.93, 0.98, 1.00 in MariaDB 11.4.1
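The tl;dr claim that MariaDB 11.4.1 was at most 12% slower than 10.2.44 follows from the smallest relative QPS value in the list above (0.88, for l.i0 and qr100). A small Python check over those values, with l.x excluded as in the text; the function names are hypothetical.

```python
# Relative QPS for MariaDB 11.4.1 vs 10.2.44, copied from the list above (l.x excluded).
REL_11_4_1_VS_10_2_44 = {
    "l.i0": 0.88,
    "l.i1": 0.99, "l.i2": 1.09,
    "qr100": 0.88, "qr500": 0.93, "qr1000": 0.92,
    "qp100": 0.93, "qp500": 0.98, "qp1000": 1.00,
}


def worst_step(rel):
    """Return (step, value) with the smallest relative QPS; ties keep the first step listed."""
    step = min(rel, key=rel.get)
    return step, rel[step]


def max_slowdown_pct(rel):
    """Largest slowdown vs the base case, in percent (0 if nothing is slower)."""
    _, worst = worst_step(rel)
    return max(0.0, (1.0 - worst) * 100.0)


if __name__ == "__main__":
    step, value = worst_step(REL_11_4_1_VS_10_2_44)
    print(f"worst step: {step} ({value:.2f}), max slowdown ~{max_slowdown_pct(REL_11_4_1_VS_10_2_44):.0f}%")
```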

- The base case is MariaDB 10.4.33 with the cz11a_bee config (ma100433_rel.cz11a_bee).

- It is compared with MariaDB 10.4.33 using the cz11abpi1_bee and cz11aredo1_bee configs

- MariaDB with the cz11abpi1_bee config does worse on the l.i1 and l.i2 benchmark steps

- There might be more contention on the single buffer pool instance.

- From the metrics for the l.i1 benchmark step I see larger values for context switches per query (cspq) and CPU per query (cpupq). I also see lower CPU utilization (cpups) and a lower write-back rate (wmbps is storage MB/s written).

- MariaDB with the cz11aredo1_bee config does worse on the qp* benchmark steps

- From the metrics with the qp* benchmark steps I see a larger value for CPU per query (cpupq)

- Throughput per benchmark step relative to the base case

- l.i0

- relative QPS is 0.99 with cz11abpi1_bee

- relative QPS is 1.01 with cz11aredo1_bee

- l.x - I ignore this for now

- l.i1, l.i2

- relative QPS is 0.90, 0.94 with cz11abpi1_bee

- relative QPS is 1.00, 1.00 with cz11aredo1_bee

- qr100, qr500, qr1000

- relative QPS is 1.00, 1.00, 1.01 with cz11abpi1_bee

- relative QPS is 0.99, 0.98, 0.99 with cz11aredo1_bee

- qp100, qp500, qp1000

- relative QPS is 0.99, 1.01, 1.00 with cz11abpi1_bee

- relative QPS is 0.95, 0.95, 0.95 with cz11aredo1_bee
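The explanations above rely on per-query metrics that normalize system counters by the number of operations. The sketch below assumes cspq is context switches divided by operations, cpupq is CPU time divided by operations, and wmbps is MB written divided by elapsed seconds, all over the same interval. The class and field names are hypothetical, and this is not the Insert Benchmark's actual code.

```python
from dataclasses import dataclass


@dataclass
class IntervalCounters:
    """Hypothetical counters measured over one benchmark step."""
    seconds: float          # elapsed time of the step
    operations: int         # queries, inserts or deletes completed
    context_switches: int
    cpu_usecs: float        # CPU time consumed, in microseconds
    mb_written: float       # storage writes, in MB


def per_query_metrics(c: IntervalCounters) -> dict:
    """Normalize counters: per-operation costs plus the storage write rate."""
    if c.operations <= 0 or c.seconds <= 0:
        raise ValueError("need a positive operation count and duration")
    return {
        "cspq": c.context_switches / c.operations,
        "cpupq": c.cpu_usecs / c.operations,
        "wmbps": c.mb_written / c.seconds,
    }
```

Comparing these values between two configs for the same step shows whether each operation got more expensive. A larger cpupq together with lower throughput is consistent with, but does not prove, the contention explanation given above.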

- The base case is MariaDB 10.11.7 with the cz11a_bee config (ma101107_rel.cz11a_bee) and 10.11.7 releases with other configs are compared to it.

- The results here are similar to the low-concurrency results, except that the cz11b_bee and cz11c_bee configs do much worse here on several of the write-heavy steps than they do in the low-concurrency test.

From the summary:

- The base case is MariaDB 11.4.1 with the cz11b_bee config (ma110401_rel.cz11b_bee) and 11.4.1 with the cz11c_bee config is compared to it. Note that 11.4.1 does not support the equivalent of O_DIRECT_NO_FSYNC for innodb_flush_method.

- For the cz11c_bee config

- Performance for the write-heavy steps (l.i0, l.i1, l.i2) is ~10% worse than the base case. This does not happen in the low-concurrency results.

- Performance for qp100 is ~7% worse than the base case. This is similar to the low-concurrency results.

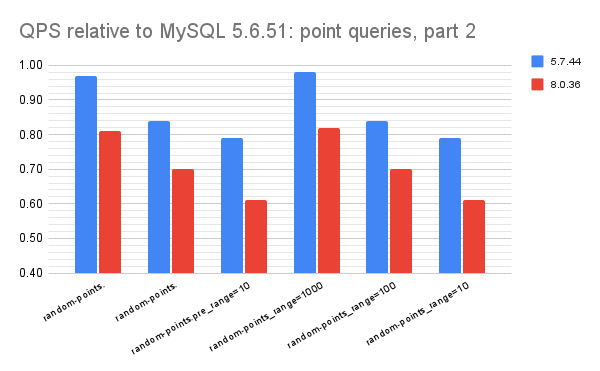

- The base case is MySQL 5.6.51 with the cz11a_bee config (my5651_rel.cz11a_bee) and it is compared to MySQL 5.7.44 and 8.0.36.

- Relative throughput per benchmark step

- l.i0

- relative QPS is 0.84 in MySQL 5.7.44

- relative QPS is 0.60 in MySQL 8.0.36

- l.x - I ignore this for now

- l.i1, l.i2

- relative QPS is 1.33, 1.17 in MySQL 5.7.44

- relative QPS is 0.78, 0.72 in MySQL 8.0.36

- qr100, qr500, qr1000

- relative QPS is 0.79, 0.80, 0.80 in MySQL 5.7.44

- relative QPS is 0.69, 0.68, 0.69 in MySQL 8.0.36

- qp100, qp500, qp1000

- relative QPS is 0.82, 0.86, 0.81 in MySQL 5.7.44

- relative QPS is 0.64, 0.67, 0.64 in MySQL 8.0.36
